Casting a critical eye on climate models

17 January 2011 by Anil Ananthaswamy

Magazine issue 2795.

Today's climate models are more sophisticated than ever – but they're still limited by our knowledge of the Earth. So how well do they really work?

CLIMATEGATE. Glaciergate. The past year or so has been a sordid time for climate science. It started with the stolen emails, which led to allegations that climate scientists had doctored data to demonstrate that humans are responsible for global warming. Then the world's most important organisation for monitoring climate change found itself in the dock for claiming that Himalayan glaciers would disappear by 2035, without the backing of peer-reviewed research. I admit feeling - as many surely did - a sense of unease. However unfounded the allegations, however minor the infractions, they only served to further cloud the debate over whether humans are irreparably changing Earth's climate.

Trying to unpick the arguments about human culpability and what the future holds for us hinges on one simple fact: there is only one Earth. That is not the opening line of a sentimental plea to protect our planet from climate change. Rather, it is a statement of the predicament faced by climate scientists. Without a spare Earth to experiment upon, they have to rely on computer models to predict how the planet is going to respond to human influence.

Today's climate models are sophisticated beasts. About two dozen of them, developed in the US, Europe, Japan and Australia, aim to predict the evolution of our climate over the coming decades and centuries, and the results are used by the Intergovernmental Panel on Climate Change (IPCC) to inform citizens and governments about the state of our planet and to influence policy.

But there is a snag. Our knowledge about the Earth is not perfect, so our models cannot be perfect. And even if we had perfect models, we wouldn't have the computing resources needed to run the staggeringly complex simulations that would be accurate to the tiniest details.

So modellers make approximations, which naturally lead to a level of uncertainty in the results. Not surprisingly, this has led some people to rightly question the role of natural variability in climate relative to human influence, and the accuracy of the models. Others argue that the uncertainties in climate models are irrelevant compared with doubts over our ability to cut carbon dioxide emissions. So who is right?

To make sense of it all, it is worth retracing the beginnings of climate science. In the late 1850s, the Irish-born scientist John Tyndall showed that certain gases, including carbon dioxide, water vapour and ozone, absorb heat more strongly than the atmosphere as a whole, which is composed mainly of nitrogen and oxygen. Later, in 1895, Swedish physicist and chemist Svante Arrhenius calculated the effect of different amounts of CO2, which makes up about 0.04 per cent of the atmosphere. From this work he predicted that doubling the CO2 concentration would warm the Earth enough to cause glaciers to retreat.

More studies followed. In 1938, English engineer Guy Callendar calculated what is now called the Earth's climate sensitivity, which is the amount by which the planet will warm for every doubling in the amount of atmospheric CO2. The figure he came up with was 2 °C.
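To see what a sensitivity figure implies in practice, here is a minimal sketch. It assumes that warming scales with the logarithm of the CO2 concentration, so that every doubling adds the same increment. The function name and the 280 parts per million baseline are assumptions chosen for illustration, not Callendar's own method.

```python
import math

def warming_from_co2(concentration_ppm, baseline_ppm=280.0, sensitivity_c=2.0):
    """Estimated warming in °C, assuming each doubling of CO2 adds `sensitivity_c`."""
    # Number of doublings relative to the (assumed) baseline concentration
    doublings = math.log2(concentration_ppm / baseline_ppm)
    return sensitivity_c * doublings

one_doubling = warming_from_co2(560.0)    # 280 -> 560 ppm is one doubling
two_doublings = warming_from_co2(1120.0)  # 280 -> 1120 ppm is two doublings
print(one_doubling, two_doublings)
```

With Callendar's figure of 2 °C, one doubling gives 2 °C of warming and two doublings give 4 °C.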

Callendar was not without his critics, and the criticisms foreshadow those surrounding modern climate science. What about feedbacks due to increasing water vapour as the atmosphere warms? What about clouds? Would warming not increase cloud cover, which would block sunlight and thus cool the Earth?

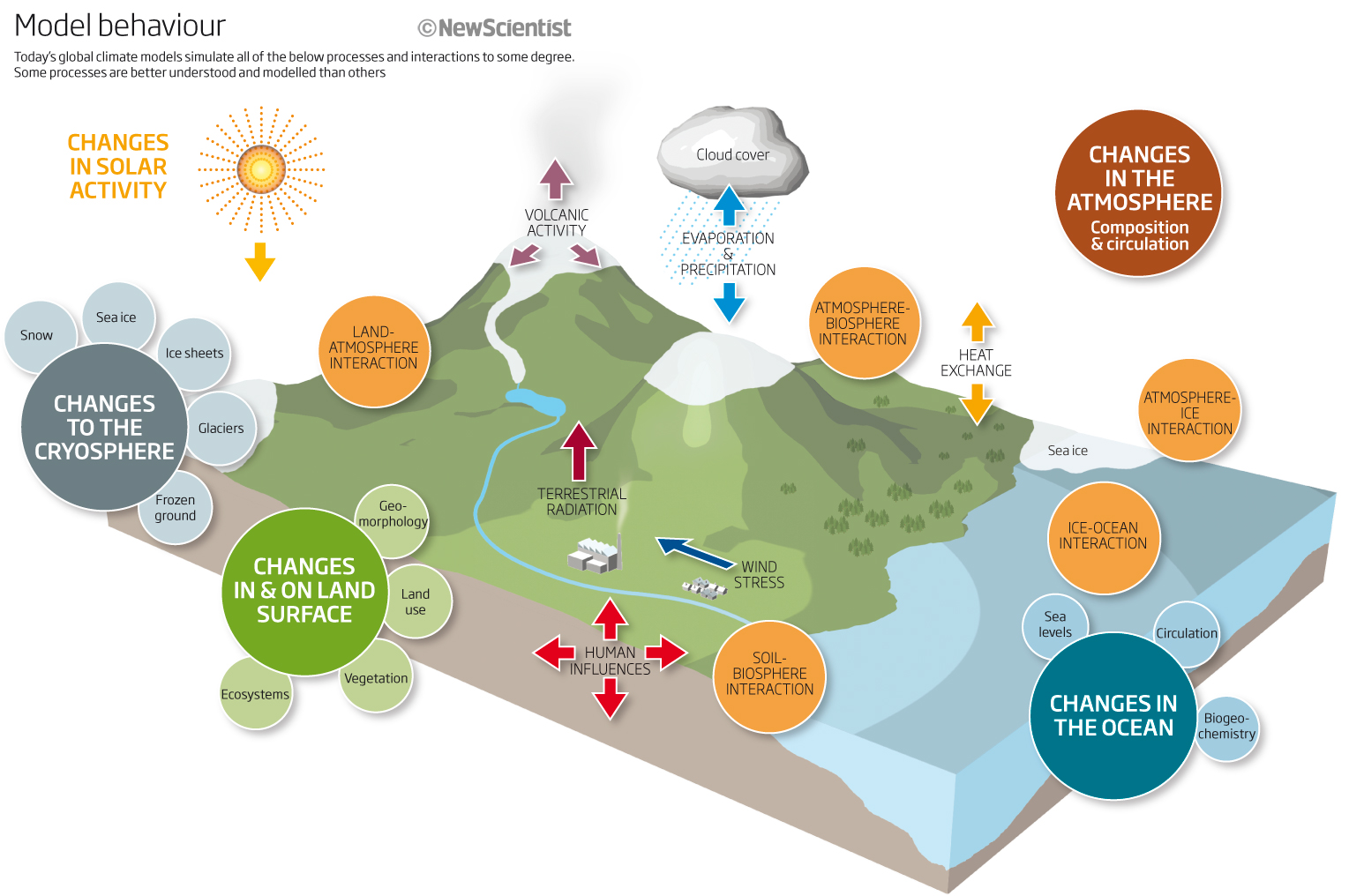

Modern climate models aim to answer such questions. Each model represents the physical, chemical and biological processes that influence Earth's climate using equations that encapsulate our best understanding of the laws governing these processes. The idea is to solve these equations to predict future climate. To make solving them easier, modellers break up the planet into chunks, or grid cells, work out the results for each cell and then collate them into a bigger picture.
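The collating step can be sketched as an area-weighted average. The example below assumes a regular latitude-longitude grid, in which cells shrink towards the poles and so are weighted by the cosine of their latitude. The grid layout, the function name and the temperatures are all made up for illustration; real models use a variety of grids.

```python
import math

def global_mean(cell_values, latitudes_deg):
    """Area-weighted mean over a regular latitude-longitude grid.

    cell_values[i] holds the values for every longitude at latitudes_deg[i].
    Each row is weighted by cos(latitude) because cells narrow towards the poles.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for row, lat in zip(cell_values, latitudes_deg):
        weight = math.cos(math.radians(lat))
        for value in row:
            weighted_sum += weight * value
            weight_total += weight
    return weighted_sum / weight_total

# Hypothetical 3 x 4 grid of temperatures in °C (made-up numbers)
lats = [-60.0, 0.0, 60.0]
temps = [
    [-5.0, -4.0, -6.0, -5.0],
    [26.0, 27.0, 25.0, 26.0],
    [-2.0, -1.0, -3.0, -2.0],
]
mean_temp = global_mean(temps, lats)
print(mean_temp)
```

Because the equatorial row counts twice as much as each row at 60 degrees, the weighted mean sits well above the simple average of the twelve numbers.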

Typically, the models are initialised to some well-known state. Climate modellers usually settle on the year 1860 because it represents pre-industrial conditions. Temperature records exist from that time and we know the composition of the atmosphere from air trapped inside ice cores drilled from Greenland and elsewhere. Once a model is initialised, it is made to step through time to see how the climate changes with each passing year. Modellers verify their predictions against existing measurements and refine their models, then run them further into the future to find out, say, the average global temperature or sea level in 2100.

The first models, developed in the 1970s, were simple by today's standards. They only studied the atmosphere's radiative forcing - the difference between the incoming and outgoing radiation energy - with particular emphasis on the effects of CO2. These models were then coupled to so-called slab oceans, simplistic representations of oceans as a layer of water a few tens of metres thick that absorbed and released heat but had no dynamical properties, such as ocean currents. In 1979, the US National Academy of Sciences released the first report on global warming, based on two such models. Called the Charney report, it estimated that Earth's climate sensitivity could be anywhere between 1.5 and 4.5 °C.
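A slab ocean coupled to a radiative forcing can be sketched in a few lines. This is a minimal, zero-dimensional illustration rather than a reconstruction of any 1970s model. The 50-metre depth, the rounded values for the density and specific heat of seawater, the forcing of 3.7 watts per square metre for doubled CO2 and the 3 °C sensitivity are all assumptions picked for the example.

```python
SECONDS_PER_YEAR = 365.0 * 24 * 3600

def slab_ocean_warming(forcing_w_m2, feedback_w_m2_k, depth_m=50.0,
                       years=200, steps_per_year=12):
    """Step a zero-dimensional energy balance forward in time.

    The slab stores heat but has no currents:
        heat_capacity * dT/dt = forcing - feedback * T
    Returns the temperature anomaly in °C at the end of each year.
    """
    # Approximate density (kg/m^3) * specific heat (J/kg/K) * depth (m)
    heat_capacity = 1000.0 * 4000.0 * depth_m
    dt = SECONDS_PER_YEAR / steps_per_year
    temperature = 0.0
    yearly = []
    for _ in range(years):
        for _ in range(steps_per_year):
            imbalance = forcing_w_m2 - feedback_w_m2_k * temperature
            temperature += dt * imbalance / heat_capacity  # explicit Euler step
        yearly.append(temperature)
    return yearly

# Assumed forcing for doubled CO2, and a feedback chosen so equilibrium warming is 3 °C
anomalies = slab_ocean_warming(forcing_w_m2=3.7, feedback_w_m2_k=3.7 / 3.0)
print(anomalies[0], anomalies[-1])
```

The slab warms quickly at first and then levels off as outgoing radiation catches up with the forcing. With these numbers it settles at the assumed 3 °C, well inside the Charney range.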

Since then, the IPCC has used models of increasing complexity to produce reports in 1990, 1995, 2001 and 2007. Land surfaces, with their effects on energy flow, were included in the models. Observations of the extent of sea ice were used to assess the changes in reflectivity, or albedo, of oceans, which themselves became more than mere slabs and began to be modelled to their full depths. Volcanic activity and aerosols such as sulphates were added to the atmospheric mix, and the carbon cycle came in, to capture how carbon moves back and forth between the atmosphere, land and sea. Even some of the chemistry that alters the contents of the atmosphere was included.

The modellers were concerned mainly with radiative forcing - and as such prioritised processes to be modelled based on how much they contributed to warming. "The focus has always been on, first and foremost, heat," says John Dunne of the Geophysical Fluid Dynamics Laboratory in Princeton, New Jersey.

Still, there are important phenomena missing from the IPCC's most recent report. Consider a region that starts warming. This causes the vegetation to die out, leading to desertification and an increase in dust in the atmosphere. Wind transports the dust and deposits it over the ocean, where it acts as fertiliser for plankton. The plankton grow, taking up CO2 from the atmosphere and also emitting dimethyl sulphide, an aerosol that helps form brighter and more reflective clouds, which help cool the atmosphere. This process involves carbon flow, aerosols, temperature changes, and so on, but all in specific ways not accounted for by each factor alone.

Extra complexity

Such complex processes are now being incorporated into the most sophisticated models, including HadGEM3, developed by the UK Met Office's Hadley Centre in Exeter. Its predictions will be used in the next IPCC report in 2014. "We have got a whole complex cycle going on here that we didn't have before and that could well be important for climate," says Bill Collins, project manager for HadGEM3.

Models are not just increasing in complexity, they are also getting better at representing smaller and smaller regions of Earth. This helps assess the effect of factors such as changes in vegetation. The first IPCC report used models whose grid cells measured about 500 by 500 kilometres; the models in the 2007 report had grid cells about 110 kilometres across.
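A back-of-the-envelope calculation shows what that jump in resolution means for the workload. The sketch below assumes square cells tiling a surface of about 510 million square kilometres, and it ignores the vertical layers that real models stack in each column. The function name is made up for illustration.

```python
EARTH_SURFACE_KM2 = 510e6  # approximate surface area of Earth

def approx_cell_count(cell_width_km):
    """Rough number of square cells of the given width needed to tile Earth's surface."""
    return round(EARTH_SURFACE_KM2 / cell_width_km ** 2)

coarse = approx_cell_count(500)  # grid spacing quoted for the first IPCC report
fine = approx_cell_count(110)    # grid spacing quoted for the 2007 report
print(coarse, fine, fine / coarse)
```

On these assumptions the surface grid grows from roughly 2000 cells to more than 42,000, about a twentyfold increase.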

Surely, though, a higher number of parameters to measure leaves more room for uncertainty. That's true, according to Judith Curry, a climate scientist at the Georgia Institute of Technology in Atlanta. "The biggest climate model uncertainty monsters are spawned by the complexity monster," writes Curry on her blog Climate Etc. Still, today's complex models are considered far better than the early ones because they incorporate our best knowledge of the Earth and climate processes.

Even so, these uncertainties lead to somewhat different predictions about future climate from different models, and provide fuel for those who question the efficacy of modelling. Possibly the biggest source of variation and uncertainty among the models is the way they deal with phenomena on small scales. Climate scientists and critics alike see this as a concern. It stems from the use of grid cells to model climate phenomena. For processes that span many grid cells, such as long-range atmospheric circulation, the models use equations to calculate how those processes evolve over time. But their resolution is just not good enough when it comes to calculating smaller processes, such as convection currents over oceans, the behaviour of clouds, the influence of aerosols on cloud formation, the transport of water through soil, and processes that occur at the microbial scale, such as respiring bacteria releasing CO2 into the atmosphere.

In such cases, the processes are said to be parameterised: equations for them are solved outside the model and their results inserted. They then go on to influence the model's outcomes. Unfortunately, each model has its own way of parameterising sub-grid processes, leading to uncertainty in the outcome of simulations. "In terms of the behaviour of models, it's probably these parameterisations that have the biggest impact on the way a model will represent particular aspects of the climate," says Steve Woolnough of the National Centre for Atmospheric Sciences (NCAS) in Reading, UK.

This aspect was highlighted in the IPCC's 2007 report, which used a range of models to conclude that Earth would warm by between 2 and 4.5 °C with every doubling of CO2 concentration. "Most of that range is probably attributable to the differences in parameterisations," says Woolnough.

Another problem is the issue of whether we understand some of these processes well enough in the first place. Take, for instance, the role in a warming climate of water vapour, which is a potent greenhouse gas.

It is generally thought that the amount of water vapour the atmosphere can hold increases with temperature. What is less certain is whether this water vapour remains in the atmosphere and contributes further to warming, or quickly leaves it as precipitation. Some recent studies suggest that humidity does indeed go up with warming, leading to yet more warming. These are short-term observations, however, and whether this holds over longer time scales is an open question (Science, vol 323, p 1020).

Differing predictions

While the debate continues, climate modellers are looking for a mechanism that could counteract any possible runaway heating due to water vapour. One large-scale phenomenon that has the potential to do so is cloud formation.

Unfortunately, clouds are even less well understood. Clouds can have either a warming or a cooling effect depending on the extent to which they block sunlight versus their ability to stop radiation emitted by Earth's surface from escaping back into space. High, thin clouds tend to stop more outgoing than incoming radiation, so their net effect is to warm the atmosphere. Low, thick clouds do the opposite. But clouds can have holes in them and the size of their water droplets can vary, both of which affect their reflectivity. On top of all that, clouds are small - too small to be modelled adequately. More complex models such as HadGEM3 incorporate aspects of cloud behaviour, but they are far from having all the answers.

Given such uncertainty, how can we ever trust model predictions? "If you ask all the different models the same question, they'll all get it wrong in different ways," says Dunne. But that is the key to their success. It is the differences between models that help to ensure predictions are in the right ball park.

Today's models don't converge on predictions of, say, global temperature in 2100. Instead of relying on any one model, the IPCC uses an "ensemble" approach, using a slew of sophisticated models - each with its own bias - to narrow down the uncertainty. Studies have shown that the ensemble approach can outperform the predictions of any single model (Bulletin of the American Meteorological Society, vol 89, p 303).
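The logic of the ensemble can be shown with a toy example. The five predictions and the "true" value below are made-up numbers, not output from any real model, and the function names are chosen for illustration. The point is only that errors of opposite sign partly cancel when predictions are averaged.

```python
def ensemble_mean(predictions):
    """Simple unweighted average of the model predictions."""
    return sum(predictions) / len(predictions)

def abs_error(prediction, truth):
    return abs(prediction - truth)

# Hypothetical warming predictions in °C from five models, each biased differently
truth = 3.0
models = [2.1, 3.8, 2.6, 3.5, 3.3]

member_errors = [abs_error(m, truth) for m in models]
mean_member_error = sum(member_errors) / len(member_errors)
ensemble_error = abs_error(ensemble_mean(models), truth)
print(ensemble_error, mean_member_error)
```

The error of the averaged prediction can never exceed the average error of the individual models, and here it is far smaller. The cancellation depends on the biases pointing in different directions, though: if every model erred the same way, averaging would not help.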

What's more, despite all the caveats and weaknesses, one thing stands out: the prediction for Earth's climate sensitivity hasn't changed substantially from the 1979 Charney report to the IPCC's fourth assessment report in 2007. "People complain that the message hasn't changed," says Jerry Meehl of the National Center for Atmospheric Research in Boulder, Colorado. "Well, that's a good thing. If the message was changing every time we had an assessment that would make you nervous."

An even greater source of concern for climate modellers is how this warming will manifest regionally. The global mean temperature might rise by, say, 2 °C by 2100, but in north Africa it might rise by a lot more. Rainfall patterns might change dramatically from region to region, causing floods in some places and droughts elsewhere. But predicting regional level changes remains suspect. In the last IPCC report, all the big climate models were in serious disagreement when it came to predicting changes in precipitation on the sub-continental scales, let alone smaller regions. "That's where the effort needs to go," says Pier Luigi of NCAS. "That's what matters to people to manage their lives. That's the type of uncertainty we need to strive to resolve."

Creating regional models is an extremely difficult task, but that is what the IPCC is focusing on trying to improve.

Often forgotten in all the talk about temperature, clouds, rainfall and vegetation is the question of how the world's big ice sheets will react to a warming Earth. A lack of observational data means they are not well understood and can't be modelled in great detail. Will they melt and slide ever faster into the sea? Will they hold firm? "That's the big one. That's one that we are supremely unsuited to address well," says Dunne. "It's a big source of uncertainty if you want to know sea level."

And as I found out, that is the case with all things climate. If you want to know what the climate will be like no further ahead than the next decade, natural variability in systems such as the El Niño/La Niña effect will trump any uncertainty in climate models. If you want to understand how the Earth will be in 50 years' time, the decadal variations get averaged out and the uncertainty in climate models starts to rear its head, so improving our models will help us better predict the climate in 2060.

By 2100, however, both natural variability and the uncertainty in our models will make way for something that is far more uncertain: anthropogenic emissions. Will we get serious about cutting emissions, or continue with business as usual, or actually increase our emissions? "By late in the century, our choices come to dominate," says Richard Alley of the Pennsylvania State University at University Park, "and whatever you do with the climate models to make them better doesn't really matter that much".

Anil Ananthaswamy is a consultant for New Scientist.